Generative AI tools create text, images, audio, and other media based on patterns learned from vast amounts of data, much of it collected from the internet. These tools also pose risks: AI-generated content can be used to deceive people, spreading misinformation and disinformation at low cost. Experts warn that such content can mislead the public more easily than content created by humans.

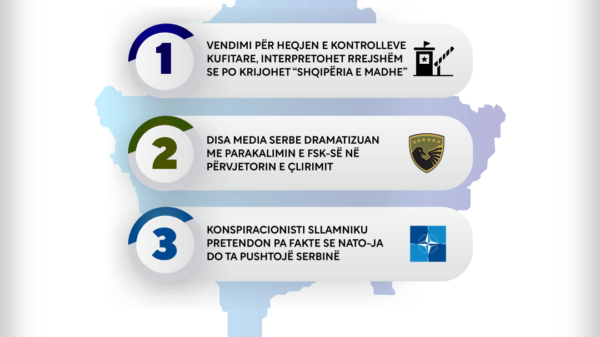

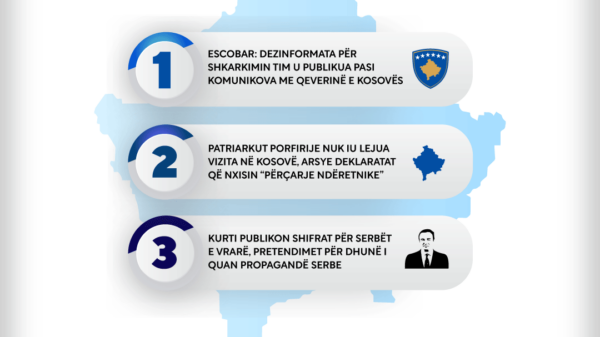

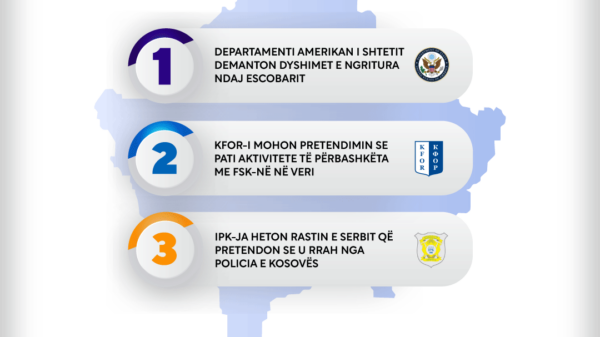

Generative AI tools raise two concerns regarding disinformation. First, they can "hallucinate," producing text and images that sound and look credible but deviate from reality or lack any factual basis; careless or unaware users may then spread these as facts. Second, they make it easier to create and disseminate deliberate, convincing disinformation, including "deepfakes": audio, video, and images of events that never actually occurred.

AI Hallucinations as a Cause of Misinformation

Misinformation is not always intentional. We often assume that AI-driven chatbots give us accurate answers. However, they sometimes generate responses that are not grounded in their training data or in verifiable facts and do not follow any identifiable pattern. In content generation, such responses are known as "hallucinations."

In its original sense, a hallucination is the perception of objects or events that have no real external source, such as hearing a voice call your name when no one else hears it. An AI hallucination occurs when a model produces incorrect or misleading output. It can be caused by insufficient or contradictory training data, or by the complexity of the model itself.

Large Language Models (LLMs) are designed to produce a response to a question, not necessarily an accurate one. They generate answers based on the probability that one word follows another and on the patterns between words, so the arrangement of words in the training data is crucial. An LLM provides accurate information only when the most probable word in a given context also happens to be the correct one.
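To make the word-probability mechanism more concrete, here is a deliberately tiny sketch in Python: a bigram model that predicts each next word purely from counts of which word followed which in a made-up training corpus. Real LLMs use neural networks and condition on far longer context, so this is only a simplified analogy; the corpus and the function names (`train_bigrams`, `next_word_probs`, `complete`) are hypothetical and chosen for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy training data: a handful of short sentences.
CORPUS = [
    "the capital of france is paris",
    "the capital of italy is rome",
    "the capital of spain is madrid",
    "paris is a large city",
]


def train_bigrams(sentences):
    """Count how often each word is immediately followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probs(counts, prev):
    """Convert the follow-counts for `prev` into probabilities."""
    total = sum(counts[prev].values())
    return {word: c / total for word, c in counts[prev].items()}


def complete(counts, prompt, max_words=1):
    """Greedily append the most probable next word(s) to the prompt.

    The model only looks at the last word, never at whether the result is true.
    max() returns the first word with the highest probability, so ties go to
    the word that was seen first during training.
    """
    words = prompt.split()
    for _ in range(max_words):
        probs = next_word_probs(counts, words[-1])
        if not probs:  # no word ever followed this one in training
            break
        words.append(max(probs, key=probs.get))
    return " ".join(words)


model = train_bigrams(CORPUS)
print(next_word_probs(model, "is"))  # each of the four followers gets 0.25
print(complete(model, "the capital of spain is"))  # fluent, but says "paris"
```

The completion reads fluently yet is wrong: in this toy corpus "is" was followed by "paris", "rome", "madrid", and "a" equally often, and the tie goes to the word seen first. Nothing in the model checks the answer against facts, which, in a much simplified form, is the same gap that allows larger models to hallucinate.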

In a study testing the accuracy of ChatGPT’s answers about the birthplaces of American politicians, ChatGPT 3.5 gave incorrect birthplaces for some of them, including Hillary Clinton and John F. Kennedy Jr. In January last year, the NewsGuard platform also found that the chatbot generated false and misleading narratives on important topics such as COVID-19 and the Russian aggression in Ukraine. More recently, the Reuters Institute tested how chatbots (ChatGPT-4, Google Gemini, and Perplexity.ai) answered basic questions about the 2024 European Parliament elections. Alongside correct answers, the chatbots gave partially correct or entirely false ones. This is why ChatGPT itself displays the warning: “ChatGPT may make mistakes. Verify important information.”

Similar hallucinations have appeared in AI-generated images. After the Hamas-Israel conflict began, images purporting to show scenes from the conflict started circulating online, some of them generated by AI. One such image showed two doctors performing surgery in a destroyed building, with noticeable distortions and an illogical setting.

This was not the only AI-generated image containing hallucinations to be shared. Other examples include an image of two children praying for their deceased mother, a man with five children amid the rubble, and children among the ruins. These images were published on Albanian-language social media pages and used emotional appeals to appear credible and encourage sharing.

Synthetic Disinformation: Deepfakes

As AI blurs the line between fact and fiction, there is a noticeable increase in disinformation campaigns using deepfake videos, which can manipulate public opinion and negatively impact democratic processes.

One of the main applications of deep neural networks (deep learning) is producing synthetic data. New AI models are steadily gaining the ability to synthesize images, video, audio, and music, and their output can be practically indistinguishable from reality.

Artificial intelligence has opened new possibilities for creating fake audio and video. Deepfakes are a form of synthetic media: the technique replaces faces, voices, and expressions in videos, making people appear to say or do things they never did. The same technology can also generate audio and video that look and sound authentic.

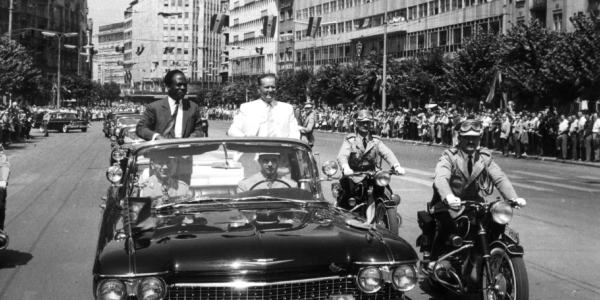

Images of the Pope in a white puffer jacket and of Donald Trump being arrested were among the first cases that drew attention to AI-generated images. Several deepfakes have also been published on social media, such as a video of Kosovo’s Prime Minister Albin Kurti speaking Arabic and one of Elon Musk speaking Albanian. These were shared mainly on TikTok, in Albanian-language content.

AI-generated misinformation tends to have a stronger emotional pull, as seen in a deepfake video of Liridona Murseli that combined a synthesized voice with an emotional script. Content of this kind has influenced public opinion through the engagement it provokes.

To spot AI hallucinations in text, look at its overall coherence: strange word choices and irrelevant or poorly contextualized details are warning signs. For images and videos, watch for sudden changes in lighting, unnatural facial movements, or odd background distortions that may indicate AI generation. When examining the source, check whether it comes from a reliable media outlet or an unknown page. Finally, if a post seems too strange to be true, search for it on Google to determine whether it is genuine or merely viral AI-generated content.

The Role of Media in Preventing Disinformation

News media can play a crucial role in prebunking, a technique designed to help people identify and resist manipulative content before they encounter it. By warning the public in advance and equipping it with the skills to recognize and reject deception, prebunking messages help people build resilience against future manipulation.

According to the guidelines of the KMSHK (Press Council of Kosovo), media outlets may use artificial intelligence to create content, but they must do so responsibly. When using AI, media should protect personal data, ensure information security, and avoid discrimination. Editorial responsibility for AI-generated content remains with the outlet, and using AI does not exempt journalists and editors from editorial standards.

Media should clearly inform readers about the use of AI in content creation, specifying which part of the content involved AI, what type of AI was used, and how it works. The use of AI must respect copyright law. Readers should be told in advance whether they are interacting with AI or with a human. When AI makes decisions that may affect readers, media should give them the option to contact a human and the right to contest those decisions.

The article was prepared by Sbunker as part of the project “Strengthening Community Resilience against Disinformation” supported through the Digital Activism Program by TechSoup Global.